SonarQube on Windows and MS SQL

Introduction

In this post we will see what is needed to install and configure SonarQube 5.4. We will also cover some basic security concerns by integrating SonarQube with our LDAP infrastructure and mapping security groups to roles.

I’m sure there are plenty of guides out there, but what I found most annoying while reading some of them is that they all take several things for granted. The information is also fragmented and not easy to find. In this post I will try to cover even the basic steps that can save you hours of struggling. I’m going to install SonarQube on Windows using MS SQL as my database of choice. In my case both services are going to reside on the same machine, but nothing prevents you from using multiple machines for your setup.

Prerequisites

A Java runtime is the main prerequisite. Although SonarQube works with Java 7, my advice is to install and use JDK 8. At the time of writing, the latest version for my platform is jdk-8u77-windows-x64.exe.

As far as MS SQL versions are concerned, 2008, 2012 and 2014 are supported, and so is SQL Express. Your SQL Server needs to support case-sensitive (CS) and accent-sensitive (AS) collation.

Installing the database

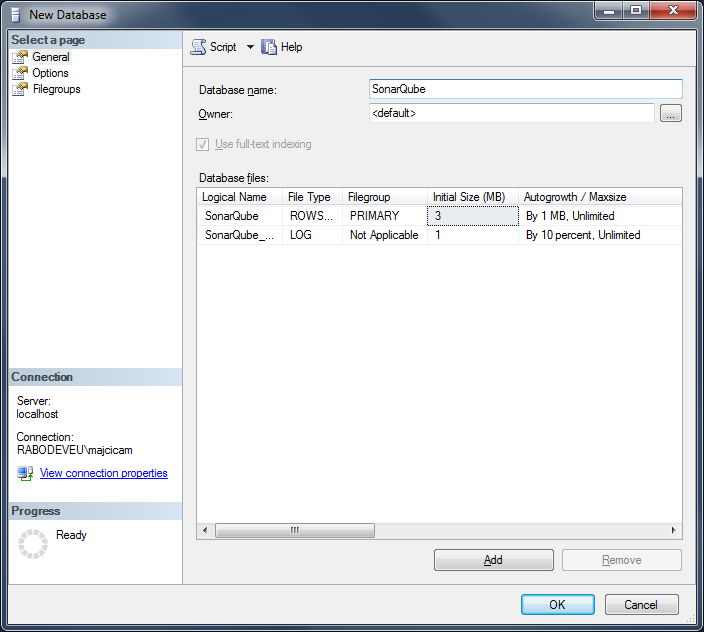

After you have installed your MS SQL version of choice, you need to create a database. Add a new database and name it SonarQube.

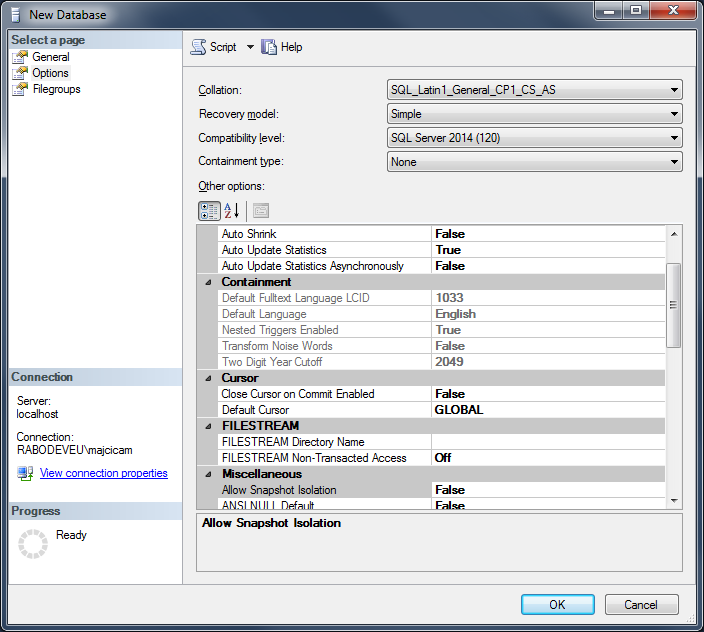

Now the important step. In the Options page you need to specify the right collation. It needs to be one of the case-sensitive (CS) and accent-sensitive (AS) collations. In my case I will go for SQL_Latin1_General_CP1_CS_AS.

Once that is set, click OK and create the new database.
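If you prefer a script to the Options page, the same step can be sketched in a few lines of Python. This is only an illustration: the helper names are mine, the collation check looks at the name alone (it simply searches for the CS and AS flags), and the generated T-SQL statement still has to be run with a tool of your choice.

```python
def is_cs_as_collation(name: str) -> bool:
    """Return True if the collation name carries both the CS and AS flags.

    Name-based check only: it does not ask the server anything.
    """
    parts = name.upper().split("_")
    return "CS" in parts and "AS" in parts


def create_database_sql(database: str, collation: str) -> str:
    """Build a T-SQL CREATE DATABASE statement with an explicit collation."""
    if not is_cs_as_collation(collation):
        raise ValueError(f"{collation} is not case- and accent-sensitive")
    # Assumes a simple database name without ']' characters.
    return f"CREATE DATABASE [{database}] COLLATE {collation};"


print(create_database_sql("SonarQube", "SQL_Latin1_General_CP1_CS_AS"))
```

A case-insensitive collation such as SQL_Latin1_General_CP1_CI_AS is rejected by the helper before any statement is produced.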

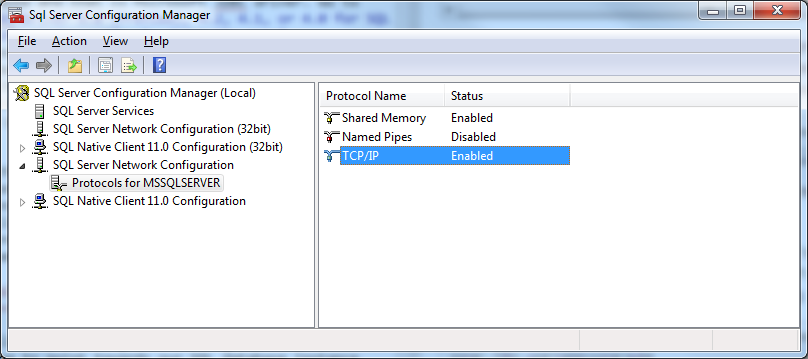

After the database is created, we need to make sure that the TCP/IP protocol is enabled for our SQL instance. Open the SQL Server Configuration Manager and, in the console pane, expand SQL Server Network Configuration. Choose Protocols for your instance. In the details pane, right-click TCP/IP, and then click Enable. Once done, restart the service. A detailed guide is available on TechNet at Enable TCP/IP Network Protocol for SQL Server.

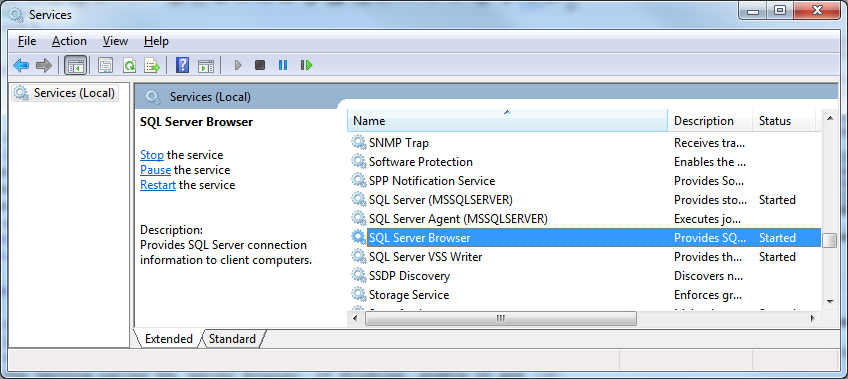

Last but not least, make sure that the SQL Server Browser service is running. It is often disabled by default, but for the JDBC driver to work it needs to be enabled and running. Open the Services management console and find the service called SQL Server Browser. If it is disabled, enable it and start the service.

That’s all for now as far as the database is concerned.
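A quick way to confirm that TCP/IP is really enabled is to try opening a TCP connection to the instance. The sketch below is a generic reachability check and nothing SQL-specific. The port is an assumption on my side: 1433 is the usual port for a default instance, while named instances often listen on a dynamic port, so check yours in SQL Server Configuration Manager.

```python
import socket


def is_tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Example (assumed port): is_tcp_port_open("localhost", 1433)
```

If the function returns False right after enabling TCP/IP, the SQL service most likely has not been restarted yet.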

Installing SonarQube

Before we start, make sure that the latest JDK is installed, then download the SonarQube installation file from the SonarQube website. For this demo I will be using the latest version of SonarQube available at the time of writing, which is 5.4. After downloading sonarqube-5.4.zip, I will extract its content into a folder of my choice, D:\SonarQube.

There is another important file we need to get and set up before we can continue configuring SonarQube, and that is the Microsoft JDBC driver. Go to Download the Microsoft JDBC Driver 6.0 (Preview), 4.2, 4.1, or 4.0 for SQL Server and download the sqljdbc_4.2.6420.100_enu.tar.gz file. Once done, open the downloaded file with the compression tool of your choice and extract all of its content into a temporary folder. Go to the sqljdbc_4.2\enu\auth\x64 folder, copy the only file present in that path, sqljdbc_auth.dll, and paste it into your System32 directory, usually C:\Windows\System32.

Now we are ready to start the configuration. Open the main configuration file of SonarQube, called sonar.properties. You can find it in the conf folder in your SonarQube installation path. Open it with the editor of your choice and search for the line reading ‘Microsoft SQLServer 2008/2012/2014 and SQL Azure’. Under that line you should see the following configuration item, which is commented out:

#sonar.jdbc.url=jdbc:sqlserver://localhost;databaseName=sonar;integratedSecurity=true

We need to uncomment this line by removing the hash sign in front of it and change the connection string to point to our SQL database instance (the one we created earlier).

The following is an example of the connection string using a named SQL instance:

sonar.jdbc.url=jdbc:sqlserver://localhost;databaseName=SonarQube;instanceName=DEV_01;integratedSecurity=true

If you are using the default instance, you can simply omit the instanceName=DEV_01 from your connection string.

As you can see, I’ve chosen to use integrated security. If you want to use SQL Authentication, remove the integratedSecurity=true part and specify the credentials as separate configuration items under your connection string (also create the user in SQL accordingly and map the newly created user to the dbo schema).

sonar.jdbc.url=jdbc:sqlserver://localhost;databaseName=SonarQube

sonar.jdbc.username=sonarqube

sonar.jdbc.password=mypassword
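To make the variants above easier to compare, here is a small Python sketch that assembles the connection string from its parts. The function and parameter names are my own invention. Only the resulting string matters, and it follows the same layout as the examples in this section.

```python
from typing import Optional


def build_jdbc_url(host: str, database: str,
                   instance: Optional[str] = None,
                   integrated_security: bool = False) -> str:
    """Assemble a SQL Server JDBC URL as used for sonar.jdbc.url."""
    parts = [f"jdbc:sqlserver://{host}", f"databaseName={database}"]
    if instance:
        # Only needed for a named instance; omit it for the default one.
        parts.append(f"instanceName={instance}")
    if integrated_security:
        # Leave this out when using SQL Authentication with username/password.
        parts.append("integratedSecurity=true")
    return ";".join(parts)


print(build_jdbc_url("localhost", "SonarQube", "DEV_01", integrated_security=True))
print(build_jdbc_url("localhost", "SonarQube"))
```

The first call reproduces the named instance example with integrated security, the second one the SQL Authentication variant.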

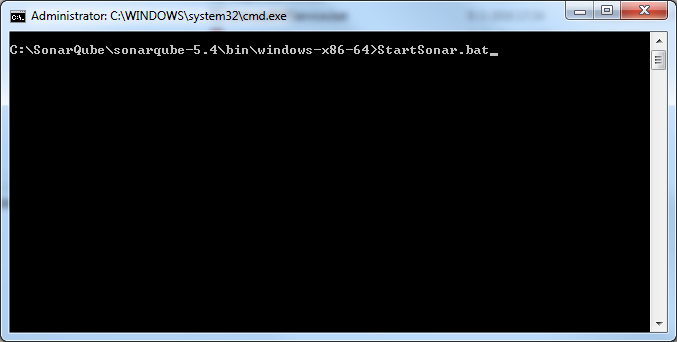

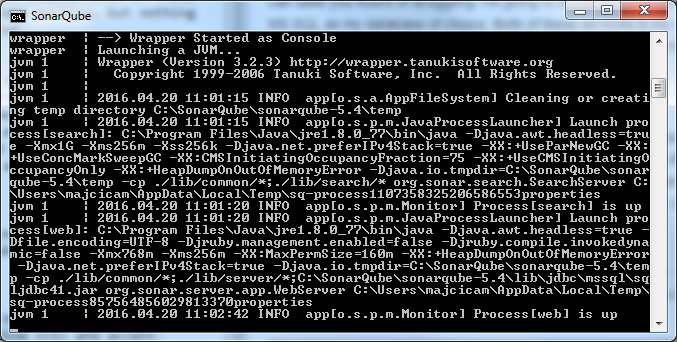

Once the connection string is set, save the configuration file and try starting SonarQube. Open the command prompt, move to the ...\bin\windows-x86-64 folder and execute StartSonar.bat.

If everything is set up correctly, you should see the following message in the console: INFO app[o.s.p.m.Monitor] Process[web] is up.

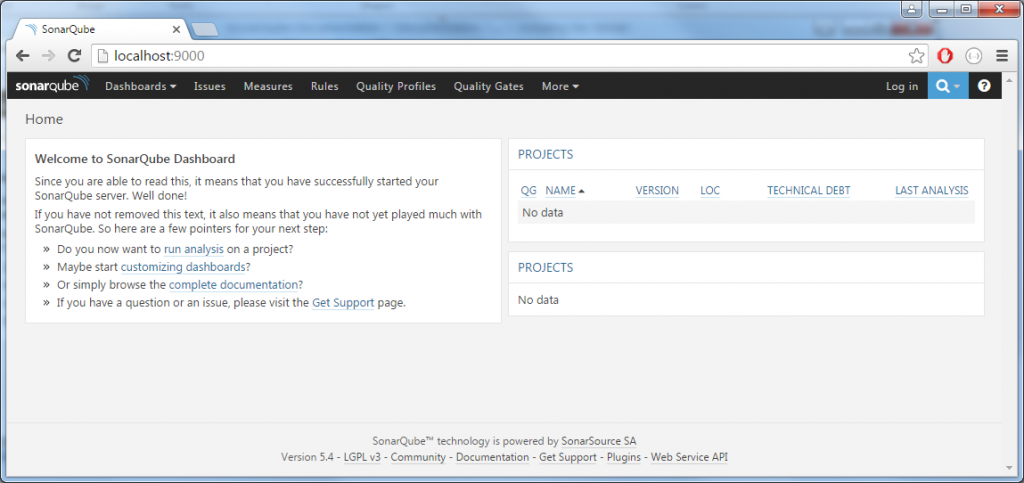

Now you can open the web browser of your choice and head to http://localhost:9000. The SonarQube welcome page should be shown.

If the page loaded, congratulations, SonarQube is running correctly on your machine.

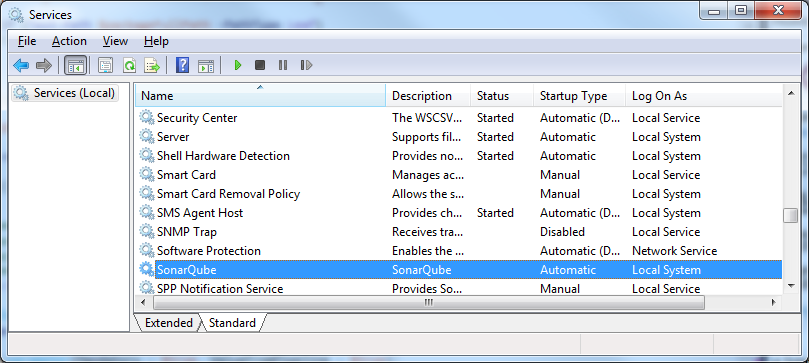

What is left to do is to create a service that will run SonarQube. Stop the current execution with CTRL+C and terminate the batch job. In the same bin folder where StartSonar.bat is located, you will find InstallNTService.bat. Execute it and you should receive the “wrapper | SonarQube installed.” message. This means that a new service has been created. Check your services management console and you should find a service called SonarQube:

As you can see from the picture, the service is created but not started.

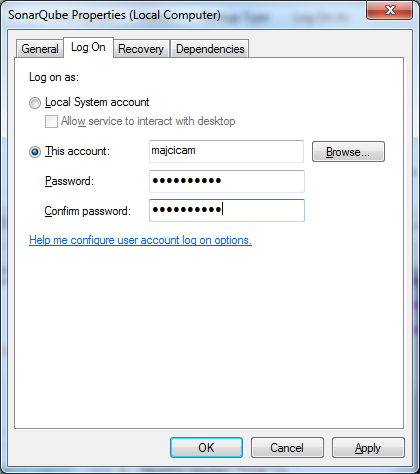

By default, the “Local System” account is used to execute the SonarQube service. If this account doesn’t have the required permission to create some directories/files in the SonarQube installation directory (which is the case by default on recent Windows versions), the execution of the SonarQube service will fail. In such case, the SonarQube service must be configured to run in the context of a suitable account.

Right-click the SonarQube service and choose Properties, then move to the Log On tab, choose “This account”, and select an account that can read from and write to the folder in which SonarQube is installed. Ideally you will have a dedicated service account created for this purpose.

Now, you can start the service manually or by launching StartNTService.bat.

Services configuration

SonarQube is the only web application running on my server, so I will move it from port 9000 to the default HTTP port, 80. To do so, edit the sonar.properties configuration file and find the commented-out #sonar.web.port=9000 line. Uncomment it and change the port value to 80, so that it reads sonar.web.port=80.

After this change you need to restart your SonarQube service and try to reach your localhost in the browser. If all went fine you will not need to specify the port at the end of the address.
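Since we keep uncommenting and changing lines in sonar.properties, it may be handy to script that edit. The following is a minimal sketch and not an official tool. The set_property helper is hypothetical, it works on the file content as a string, and it replaces the first line that defines the key, commented out or not.

```python
import re


def set_property(text: str, key: str, value: str) -> str:
    """Uncomment and set key=value in properties text, or append it if missing."""
    # Match an optional leading '#', the key, '=' and the rest of that line only.
    pattern = re.compile(
        r"^[ \t]*#?[ \t]*" + re.escape(key) + r"[ \t]*=[^\r\n]*",
        re.MULTILINE,
    )
    new_line = f"{key}={value}"
    if pattern.search(text):
        # A function as replacement keeps backslashes in the value untouched.
        return pattern.sub(lambda match: new_line, text, count=1)
    separator = "" if text.endswith("\n") or not text else "\n"
    return text + separator + new_line + "\n"


sample = "#sonar.web.port=9000\n#sonar.log.level=INFO\n"
print(set_property(sample, "sonar.web.port", "80"))
```

Keep in mind that a comment line which happens to start with the key would match as well, so review the result before saving it back to the conf folder.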

SonarQube behind a proxy

In the past I wrote numerous posts about running services and applications behind a proxy, and SonarQube is no exception. You may wonder why SonarQube should have access to the internet. My answer is: plug-ins. Plug-ins are essential to SonarQube, and the easiest way to install and update them is via the Update Center, a feature integrated into the administrative portal. For it to work, SonarQube needs to be able to access the internet. If you are behind a proxy, you need to modify the sonar.properties configuration file again.

Search for the #sonar.web.javaAdditionalOpts= configuration line and modify it by specifying the HTTP and HTTPS proxy host and port:

sonar.web.javaAdditionalOpts=-Dhttp.proxyHost=swg.myProxy.com -Dhttp.proxyPort=8080 -Dhttps.proxyHost=swg.myProxy.com -Dhttps.proxyPort=8080

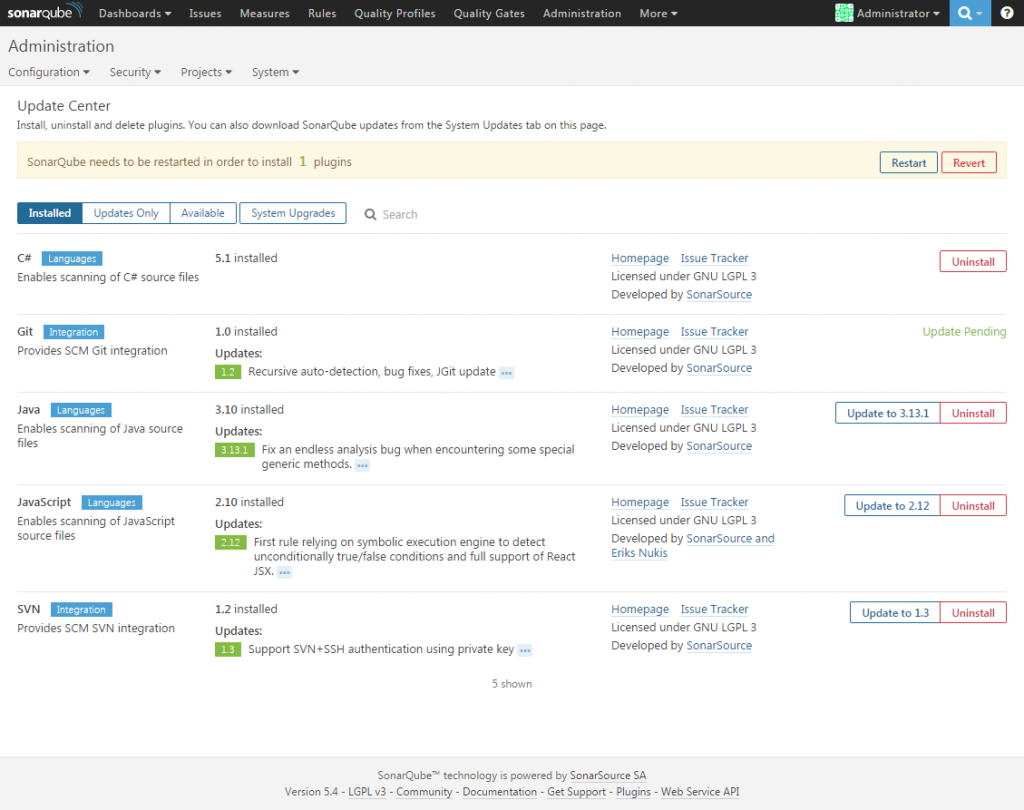

Restart the service and try the Update Center. Open the SonarQube web page and log in with the default admin user (the password is also admin). Click on the Administration menu item and then, in the sub-menu, choose System -> Update Center. Check whether the updates are retrieved and try to update one of the plug-ins installed by default, like C#. If all goes well you will see the following screen.

Once the plug-in is installed you will see a button in the notification message that offers to restart the server for you. In my case it never worked, and after choosing this option my server stopped replying. To get it back online, you need to restart the service manually.

This problem is tracked in SONAR-7422 and it is a recognized bug. It should be fixed in SonarQube 5.6.

If you were able to install or update a plug-in correctly, then your proxy settings were picked up fine.

Securing SonarQube connection

You can set up SonarQube to run over a secure HTTPS connection. It natively supports SSL certificates; however, configuring it that way is not advised. Using a reverse proxy is the recommended way to set up your SonarQube installation in production environments that need to be highly secured. This allows you to fully control all the security parameters you want. I will not dig into the details of setting up IIS as a reverse proxy. If you are interested in this, you can read the following post on Jesse Houwing’s blog, Configure SSL for SonarQube on Windows. It will guide you through setting up IIS to act as a proxy for the secure calls to your SonarQube server.

Security configuration

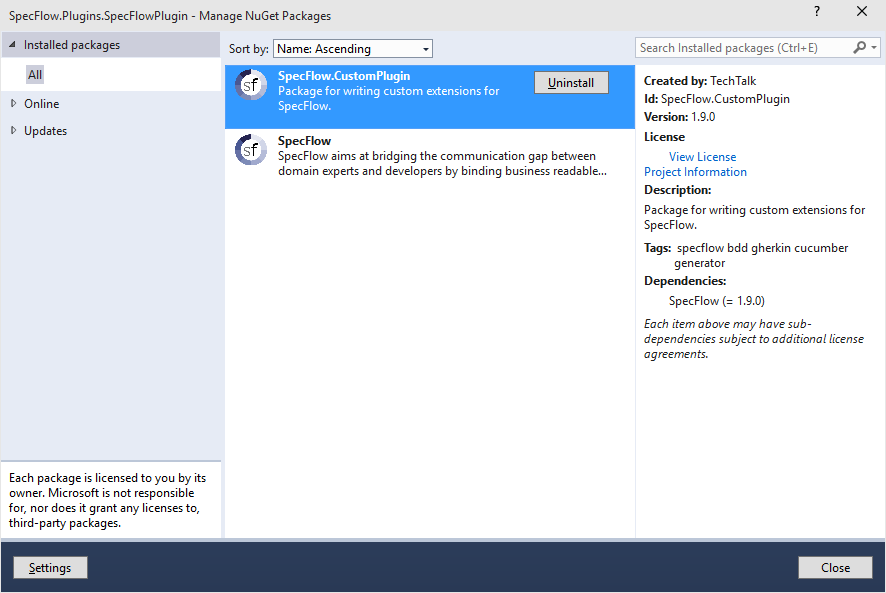

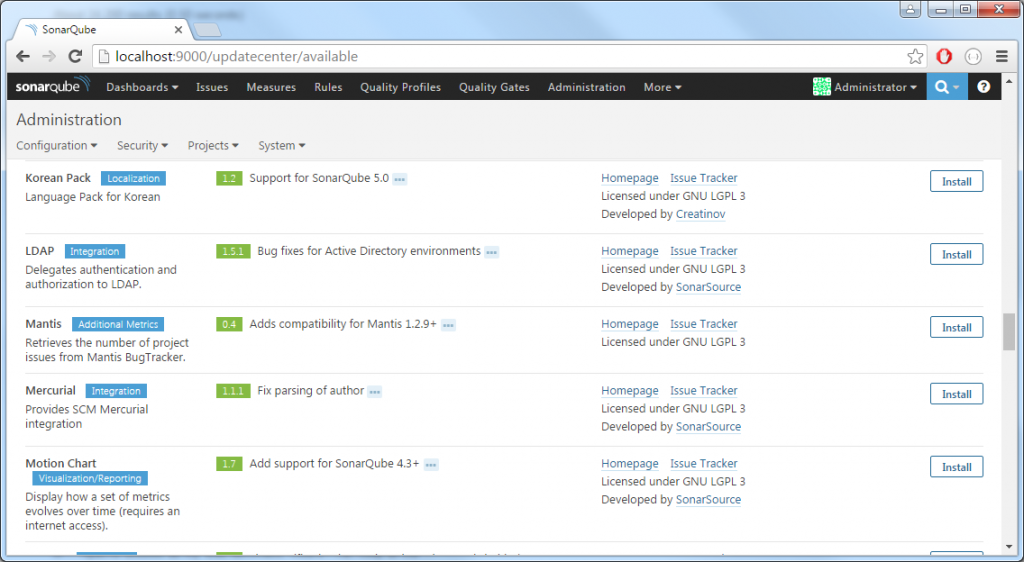

I want to integrate the authentication of the SonarQube server with my LDAP (Active Directory Domain Services). In order to do that, we need to install the LDAP plug-in. Locate the LDAP plug-in in the Update Center under available plug-ins and install it.

Before you restart your SonarQube service, open the sonar.properties configuration file and add the following section:

#----------------------------------------------------------------------

# LDAP

sonar.security.realm=LDAP

sonar.forceAuthentication=true

sonar.authenticator.downcase=true

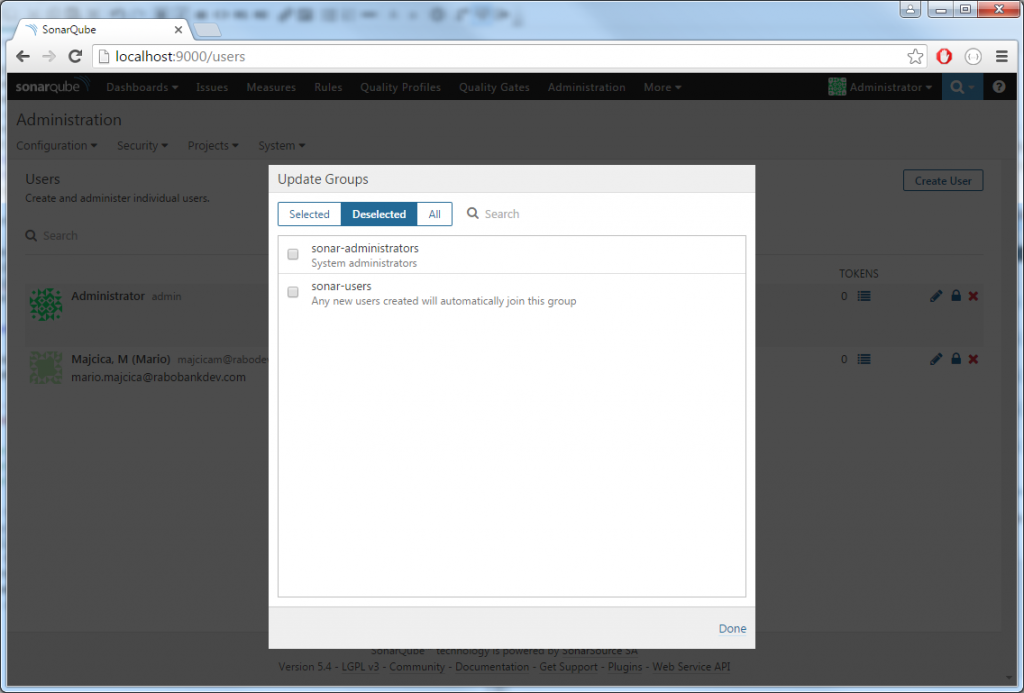

These are the only necessary settings if you are part of the Active Directory domain. Restart the SonarQube service and open the portal. If all went well, SSO kicked in and you should be logged in with your domain account. Now comes the fun part. Log out, then log in again as administrator and go to the Administration -> Security -> Users screen. You should see the domain account you logged in with in the list. Update the groups for this account and assign it to the sonar-administrators group.

Now close the browser and reopen it. Surprise, surprise: you are logged in again via your user profile, but you do not see the Administration option in your menu as you would expect. Once LDAP is configured, the membership information is retrieved on each login and the local settings are overwritten. Thus no group membership we assign locally will be persisted. In this case, LDAP/AD becomes the one and only place to manage group membership. In order to do so, we need to create a security group in AD and map it in the SonarQube Security Groups.

Before we create a new group in the SonarQube Security Groups we need to get the group’s precise name. Group names are case-sensitive and require the domain to be specified. This is not something we can guess, but we can extract it from our log file.

Add your user to the AD security group of choice. Edit the sonar.properties configuration file again and set the logging level to a more verbose setting. In order to do so, find the #sonar.log.level=INFO line, uncomment it and change the level from INFO to DEBUG. Your line should now look like sonar.log.level=DEBUG. Restart the service and open the portal.

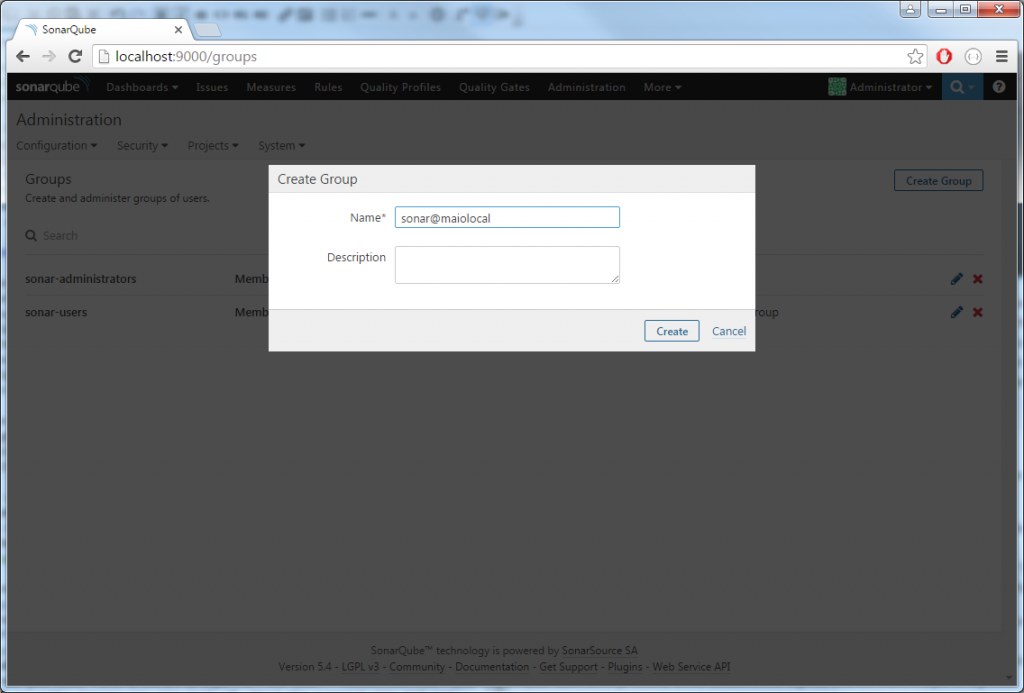

If you are successfully logged in, open the log file. In the SonarQube directory there is a folder called logs; in my case it is sonarqube-5.4\logs. Inside you will find a file called sonar.log. Open it with your editor of choice and search for your domain username. Next to your username (probably at the bottom of the log file) you will find a couple of log lines written by web[o.s.p.l.w.WindowsAuthenticationHelper]. One of those lines contains Groups for the user YOURDOMAIN\YOU followed by a list of the security groups you are part of. Find the correct one and copy it; in my case this is sonar@maiolocal. Now log in as admin and open the Groups screen. Create a new group by clicking the Create Group button in the top right corner and set the name to your group of choice, in my case sonar@maiolocal.

Once the group is created, move to the Global Permissions screen (also in the Security menu) and assign the desired permissions to the newly created group. Let’s suppose that this group will contain all of the administrators: under the Administer System permission, click on groups and select the newly created group.

Now if you close your browser and reopen it pointing to your SonarQube portal, you will get logged in via SSO and you should be able to see the Administration button in the menu. The same can be done for individual users.
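With the DEBUG level on, sonar.log grows quickly and scrolling through it by hand gets tedious. Here is a small Python sketch that only filters the lines containing the Groups for the user text and your username. It does not try to parse the group list, because I would rather not assume the exact layout of the rest of the line. The function name is hypothetical.

```python
from typing import List

MARKER = "Groups for the user"


def find_group_lines(log_text: str, username: str) -> List[str]:
    """Return the log lines that mention MARKER together with the username."""
    wanted = username.lower()  # compare case-insensitively
    return [
        line
        for line in log_text.splitlines()
        if MARKER in line and wanted in line.lower()
    ]
```

To use it, read the content of sonar.log into a string, pass it in together with your domain username (YOURDOMAIN\YOU in the description above), and copy the group name from the returned lines.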

Conclusion

This is roughly it. There are some details you will probably want to set, such as the SMTP/Email settings and the Source Control Manager settings; however, all of this is quite trivial, as you can find all of the necessary settings in the UI under General Settings. For more details check the Notifications – Administration page on the SonarQube documentation site, as well as the SCM support page.

Your SonarQube server should now be correctly installed and configured to access LDAP. Ahhh, I almost forgot: set the logging level back to INFO, otherwise you risk ending up with quite large log files on your disk.

UPDATE

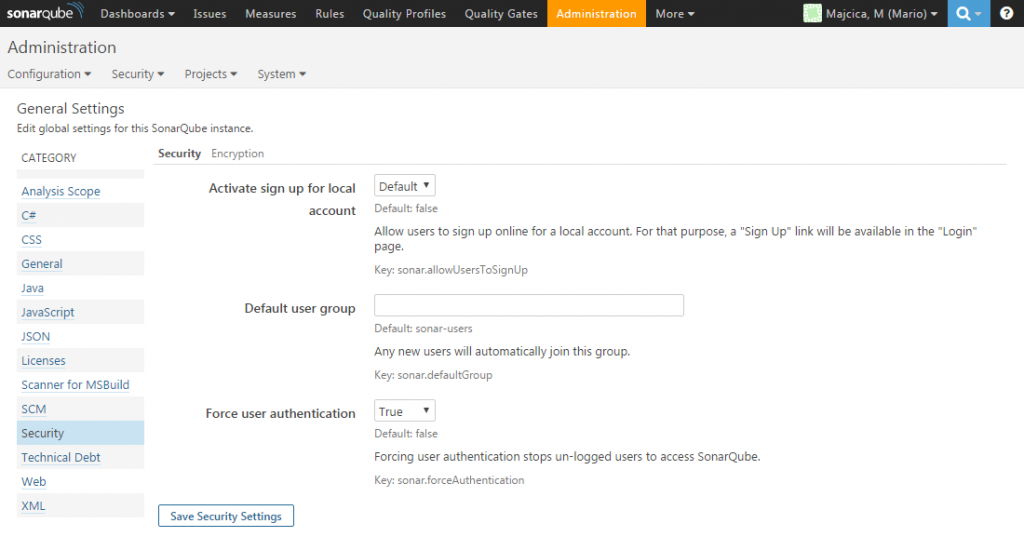

After I published my post I realized that there is a better way of forcing authentication. As Nicolas Bontoux pointed out, this setting should be set in the Administration – General Settings – Security pane. Otherwise you risk encountering a problem when upgrading your SonarQube instance to a newer version.

Enable the Force user authentication setting in the previously mentioned pane.

Once done, comment out or remove the sonar.forceAuthentication=true line from the sonar.properties configuration file and restart your service.

In this way you will not be bothered during your SonarQube updates.

UPDATE 2

With regard to the proxy settings, since SonarQube 5.5 specifying your proxy address via javaAdditionalOpts is no longer necessary (nor advisable). It is now sufficient to find the #http.proxyHost= line in the sonar.properties configuration file and set the proxy parameters as shown here:

http.proxyHost=swg.myproxy.com

http.proxyPort=8080

https.proxyHost=swg.myproxy.com

https.proxyPort=8080

Save your settings and restart the service. Your Update Center should still be working correctly.
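If you already have the proxy configured the old way, the switch is mostly a matter of turning the -D flags into plain property lines. A minimal Python sketch of that conversion follows. The function name is made up, and it deliberately keeps only the four proxy keys mentioned in this post and ignores any other option found in the string.

```python
from typing import List

PROXY_KEYS = {"http.proxyHost", "http.proxyPort", "https.proxyHost", "https.proxyPort"}


def java_opts_to_proxy_properties(opts: str) -> List[str]:
    """Turn -Dkey=value proxy flags into key=value property lines."""
    lines = []
    for token in opts.split():
        if not token.startswith("-D") or "=" not in token:
            continue  # not a -D system property
        key, _, value = token[2:].partition("=")
        if key in PROXY_KEYS:
            lines.append(f"{key}={value}")
    return lines


old = ("-Dhttp.proxyHost=swg.myProxy.com -Dhttp.proxyPort=8080 "
       "-Dhttps.proxyHost=swg.myProxy.com -Dhttps.proxyPort=8080")
for line in java_opts_to_proxy_properties(old):
    print(line)
```

After copying the resulting lines into sonar.properties, remember to remove the proxy flags from sonar.web.javaAdditionalOpts so the settings are defined in one place only.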