Signing Git Commits

What does it mean to sign a Git commit, and why would you want to do that?

The word comes from the Latin signāre, to put a mark.

As the word suggests, signing a commit puts your mark on it, so that others can verify that the commit and the code it contains really come from you and have not been tampered with.

Git is cryptographically secure, but it’s not foolproof: anyone can commit under any name and email address. To let others verify where a commit or tag really came from, Git can sign it with a GPG key.

In this post, I’ll show you how to set up all of the tooling needed to sign your Git commits. Aside from having the latest version of Git installed, you’ll also need GnuPG. So let’s start.

GPG Introduction

GnuPG, also known as GPG, is a complete and free implementation of the OpenPGP standard. OpenPGP itself derives from the original PGP software, and its details are defined in RFC 4880.

First of all, you need to download GPG, configure it and create/add your personal key.

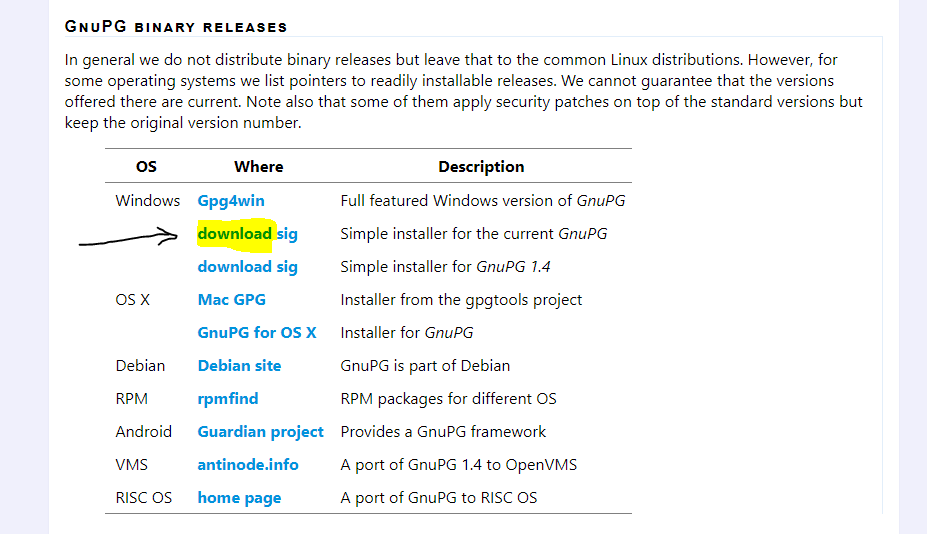

Go to https://www.gnupg.org/download/index.html and, under “GnuPG binary releases”, in the Windows section, choose “Simple installer for the current GnuPG” and download the installer.

Once it has downloaded, run the installer. The installation procedure is simple, as there are no particular options to choose.

Once GnuPG is installed, you are ready to create a new key, which is the fundamental prerequisite for signing our commits.

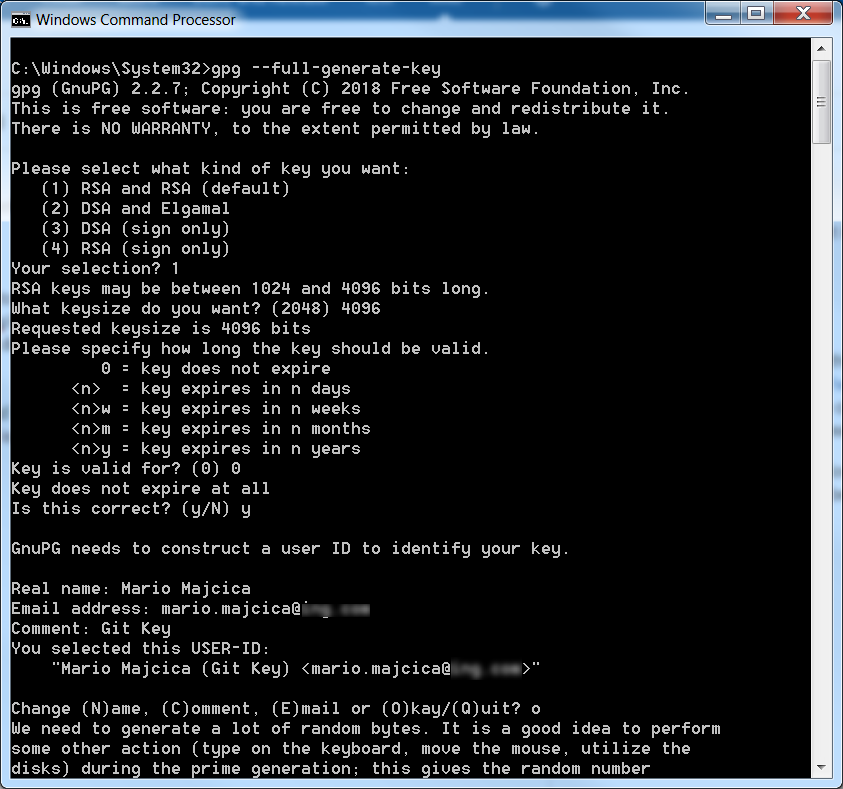

In a command prompt, issue the command `gpg --full-generate-key`. You will be asked several questions that you need to answer before your key is created. Check the following example:

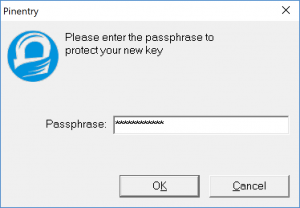

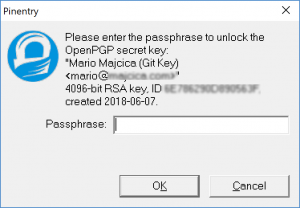

At the end of the process, a pop-up window will ask you for a password to protect this key. Please provide one.
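If you need to repeat this setup, the answers to those questions can also be written to a parameter file and handed to GnuPG's unattended key generation. The following is only a minimal sketch, assuming a POSIX shell such as Git Bash. The helper name `write_key_params`, the file name, and the chosen values (RSA, 4096 bits, no expiry) are illustrative assumptions, not the values shown in the screenshots.

```shell
# Hypothetical helper: writes a parameter file for GnuPG's unattended
# key generation. Arguments: real name, email address, output file.
write_key_params() {
    cat > "$3" <<EOF
Key-Type: RSA
Key-Length: 4096
Name-Real: $1
Name-Email: $2
Expire-Date: 0
%commit
EOF
}

# Usage (not run here): create the file, then generate the key from it.
# No Passphrase line is written, so GnuPG should still ask for the
# password through its usual pop-up.
#   write_key_params "Your Name" "you@example.com" key-params.txt
#   gpg --batch --generate-key key-params.txt
```

The interactive `gpg --full-generate-key` route described above remains the simplest option for a one-off key.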

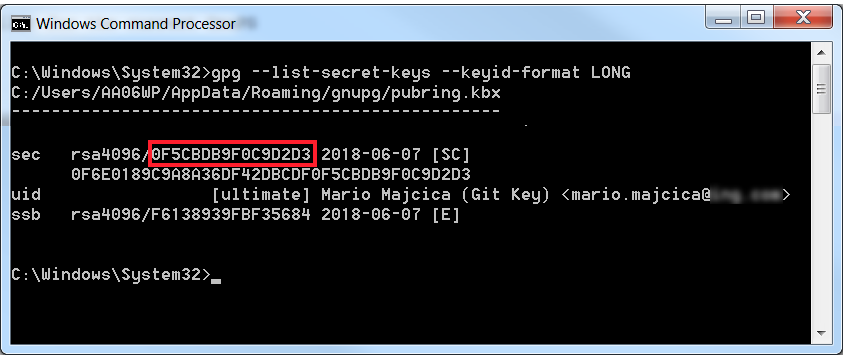

Once the key is created, you need to let Git know about it. First, issue the command `gpg --list-secret-keys --keyid-format LONG`, which lists the necessary information about the newly created key. You should see something like this.

Now copy the value that is highlighted in red (the key id) and issue the command `git config --global user.signingkey 0F5CBDB9F0C9D2D3` (where 0F5CBDB9F0C9D2D3 is your key id).

This is necessary so that Git knows what key it should use in order to sign your commits.
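Instead of copying the key id by hand, it can be picked out of the listing with a small filter. This is a sketch under assumptions: it expects a POSIX shell such as Git Bash, the helper name `first_secret_key_id` is made up for illustration, and it relies on the `sec` line having the usual `algorithm/KEYID` form in its second column.

```shell
# Hypothetical helper: reads the output of
#   gpg --list-secret-keys --keyid-format LONG
# on stdin and prints the long key id of the first secret key.
first_secret_key_id() {
    # A "sec" line looks like (date is illustrative):
    #   sec   rsa4096/0F5CBDB9F0C9D2D3 2019-01-01 [SC]
    awk '$1 == "sec" { split($2, parts, "/"); print parts[2]; exit }'
}

# Usage (not run here):
#   key_id=$(gpg --list-secret-keys --keyid-format LONG | first_secret_key_id)
#   git config --global user.signingkey "$key_id"
```

If you have more than one secret key, this picks the first one listed, so check that it is the key you intend to sign with.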

However, we are still not ready to sign our first commit. We still need to set `gpg.program` in our global Git config. To do so, we first need the path of the gpg executable. The easiest way to find it is to run `where gpg`, which returns the path where gpg was installed. Now we can set the configuration by running `git config --global gpg.program "C:\Program Files (x86)\GnuPG\bin\gpg.exe"` (if your path differs from this one, adjust it accordingly).

Also, before proceeding, make sure that the Git `user.name` and `user.email` settings are set. If they are not yet initialized, run `git config --global user.name "Mario Majcica"` and `git config --global user.email your@email.com`, substituting your own name and email address.
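The four settings discussed so far can be applied in one go. This is a sketch, assuming a POSIX shell such as Git Bash; the function name `configure_commit_signing` is hypothetical, and the values in the usage note are placeholders to replace with your own.

```shell
# Hypothetical helper: applies the global Git settings needed for signing.
# Arguments: key id, path to the gpg executable, user name, user email.
# Stops at the first setting that fails.
configure_commit_signing() {
    git config --global user.signingkey "$1" &&
    git config --global gpg.program "$2" &&
    git config --global user.name "$3" &&
    git config --global user.email "$4"
}

# Usage (not run here):
#   configure_commit_signing 0F5CBDB9F0C9D2D3 \
#       'C:\Program Files (x86)\GnuPG\bin\gpg.exe' \
#       'Your Name' you@example.com
```

Single quotes around the Windows path keep the shell from touching the backslashes.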

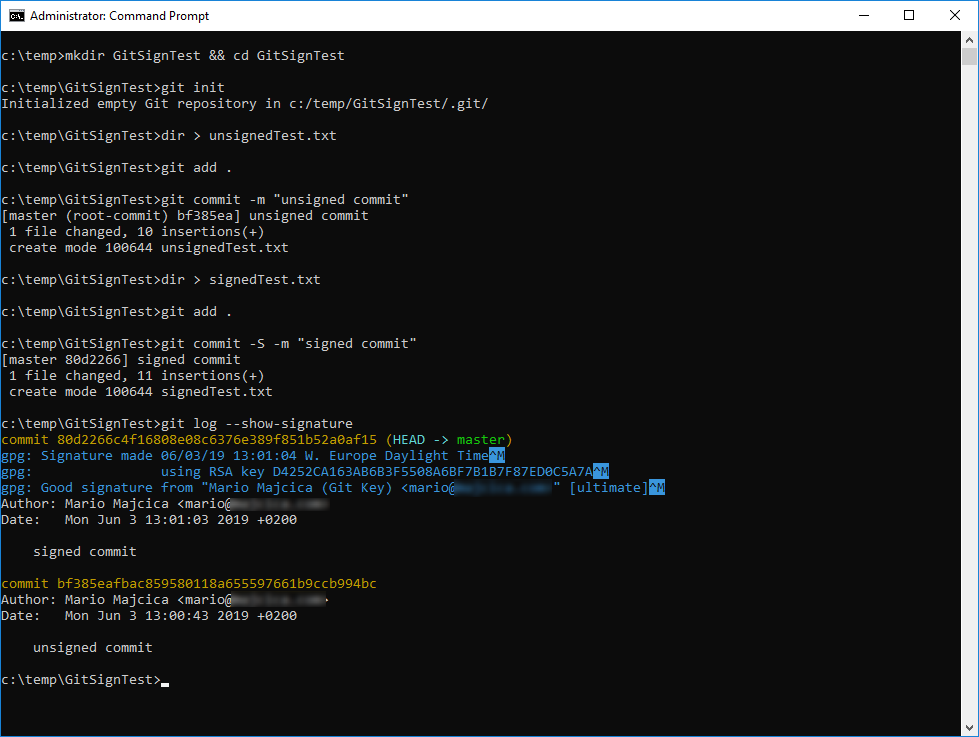

Now we are ready to sign our first commit. Initialize a new Git repository, add a file, and run `git commit -S -m "signed commit"`. At this point you should be prompted for the password of your key, the one you chose when you created the key:

Once you enter your password, the commit is created and signed.

Let’s now verify that our signature is there. To do so, issue the command `git log --show-signature -1` or, for a more compact overview, `git log --pretty="format:%h %G? %aN %s"`.
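The `%G?` placeholder prints a single status letter per commit. The sketch below spells those letters out as I understand them from Git's pretty-format documentation; it assumes a POSIX shell such as Git Bash, and the function name `describe_signature_status` is made up for illustration.

```shell
# Hypothetical helper: translates a %G? status letter from `git log`
# into a short description.
describe_signature_status() {
    case "$1" in
        G) echo "good signature" ;;
        B) echo "bad signature" ;;
        U) echo "good signature, unknown validity" ;;
        X) echo "good signature, expired" ;;
        Y) echo "good signature, made by an expired key" ;;
        R) echo "good signature, made by a revoked key" ;;
        E) echo "signature cannot be checked (for example, missing key)" ;;
        N) echo "no signature" ;;
        *) echo "unrecognized status: $1" ;;
    esac
}

# Usage (not run here): describe the signature of the latest commit.
#   describe_signature_status "$(git log -1 --pretty='format:%G?')"
```

A freshly signed commit made with your own, ultimately trusted key would normally show `G`.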

You can learn more about Git and the available commands for signing commits at https://git-scm.com/book/en/v2/Git-Tools-Signing-Your-Work.

Export/Import

The next step is to export our key. Why would we do that? For example, so that you can import it on another machine of yours, or add it to services like GitHub, which can then validate your signature.

Let’s first export our public key. To do so, use the following command: `gpg --export -a 0F5CBDB9F0C9D2D3 > publicKey.asc`

Obviously, 0F5CBDB9F0C9D2D3 is my key id in this case; substitute this value with your key id.

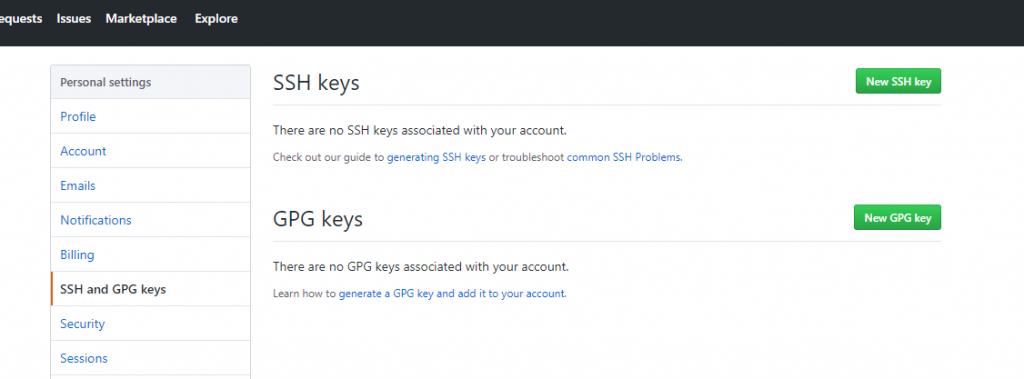

This command will create a file called publicKey.asc in your current folder. Open this file with a text editor of your choice; its content is what your GitHub account needs. Now open GitHub.com and log in. Under the settings, you will find a menu called “SSH and GPG keys”. Open this menu, then choose “New GPG Key”:

Now, copy the content of publicKey.asc and paste it in the page on GitHub, then just click “Add GPG Key”.

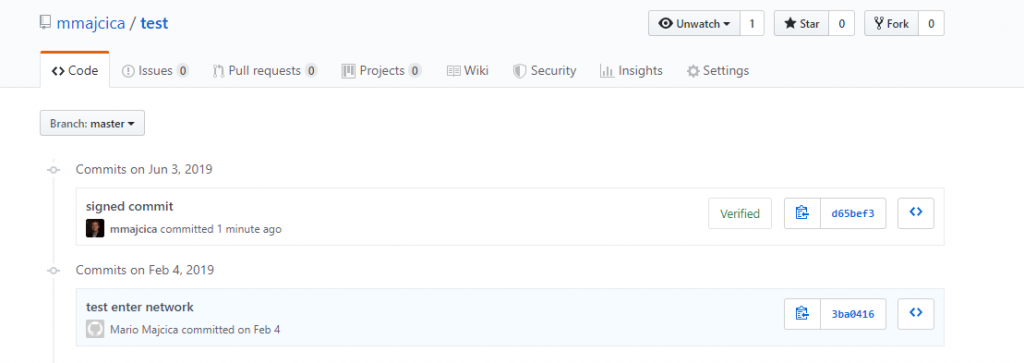

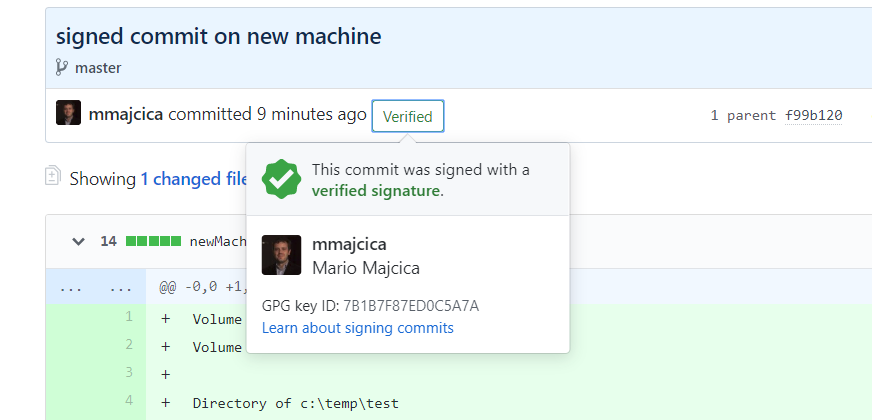

Once done, you should see your new key listed on the GitHub page “SSH and GPG keys”, under GPG Keys. I’ll now edit one of the projects on GitHub and push a signed commit. As you can see, the commit is now listed as verified.

If you click on the Verified icon, you will be able to see the details of the signature:

Before we move to the import part, let me show you a trick on how to automate this in a popular IDE, Visual Studio Code.

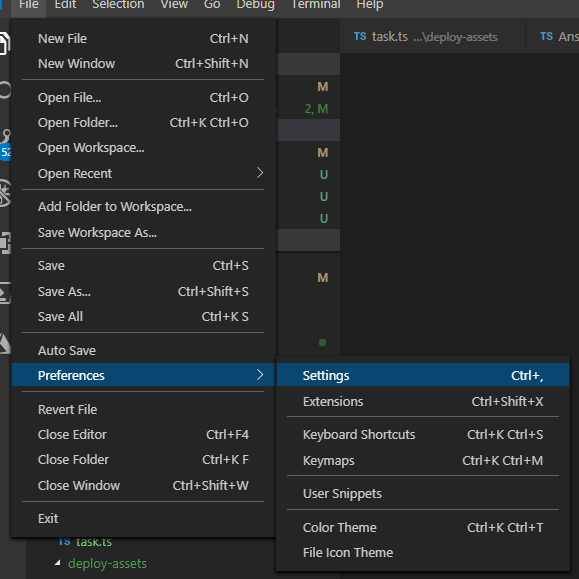

Now that we are all set up, we can instruct Visual Studio Code to sign the commits that are made from the IDE. To do that, open the settings page in Visual Studio Code

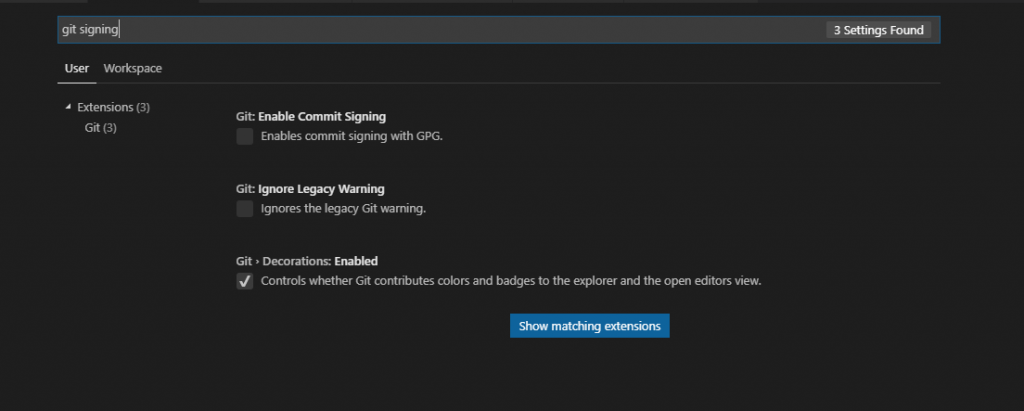

then search for ‘git signing’ and the relevant setting should be listed:

The setting in question is ‘Enable Commit Signing’ (`git.enableCommitSigning` in the settings file). Check it, then make a new commit. List your commit log and you’ll see that commits made directly from Visual Studio Code are now signed as well.

However, the export doesn’t end here. We need to export the private key in order to be able to import it and use it on another machine. To do so, run the command `gpg --export-secret-keys -a 0F5CBDB9F0C9D2D3 > privateKey.asc` (where 0F5CBDB9F0C9D2D3 should be your key id). Store this file carefully and never expose it publicly. It is still protected by the password, but if the file leaks, the password becomes the weak link.
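Because publicKey.asc and privateKey.asc look alike at a glance, a quick check before pasting anything into a web page can prevent an accident. This is a sketch, assuming a POSIX shell such as Git Bash; the function name `armored_key_kind` is hypothetical, and it looks only at the first line of the file.

```shell
# Hypothetical helper: reports which kind of ASCII-armored key block a
# file holds, judging only by its first line.
armored_key_kind() {
    # Strip a possible carriage return left by Windows line endings.
    case "$(head -n 1 "$1" | tr -d '\r')" in
        "-----BEGIN PGP PUBLIC KEY BLOCK-----")  echo "public" ;;
        "-----BEGIN PGP PRIVATE KEY BLOCK-----") echo "private" ;;
        *)                                        echo "unknown" ;;
    esac
}

# Usage (not run here):
#   armored_key_kind publicKey.asc
#   armored_key_kind privateKey.asc
```

Only a file reported as `public` should ever be uploaded to GitHub or shared.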

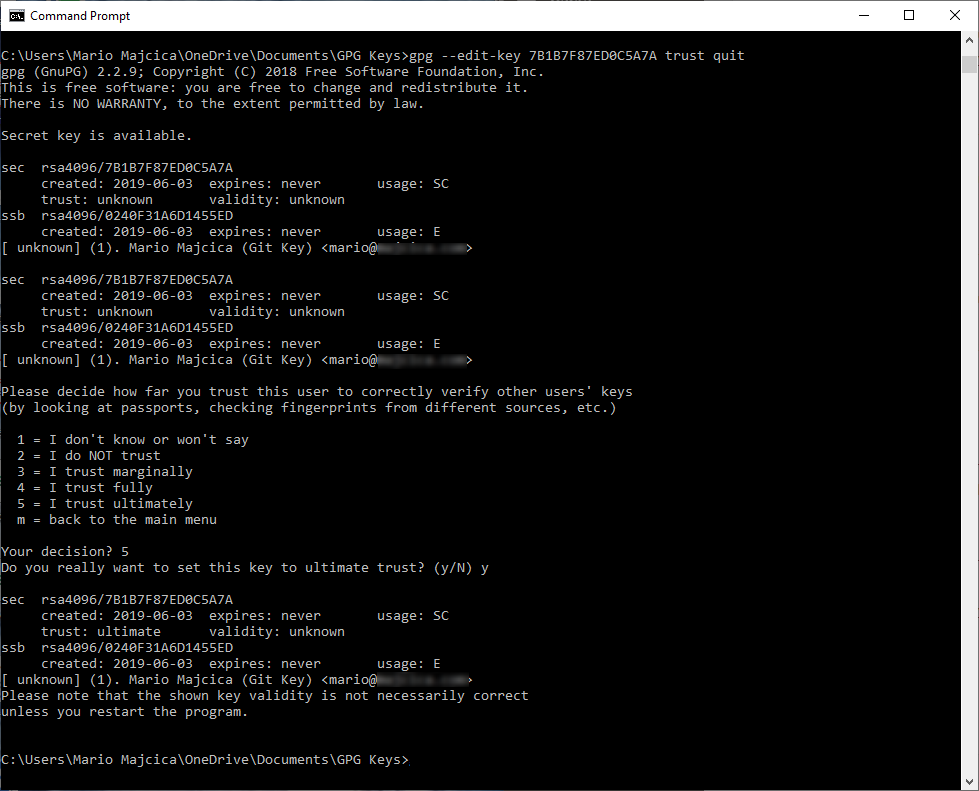

It is now time to import it. For that, it is sufficient to issue the command `gpg --import privateKey.asc`. You do not need to import the public key separately, as the private key export always contains the public key. One last thing: if the key is imported on another machine, you need to indicate the level of trust towards the newly imported key. You can easily achieve that with the command `gpg --edit-key 0F5CBDB9F0C9D2D3 trust quit`, where 0F5CBDB9F0C9D2D3 is again the key id of the key on that machine. After you issue the command you will see the following screen:

At this stage, you will be asked for a decision. Enter 5 to indicate that you trust the given key ultimately, and your job is done.

If the key already exists on the new machine, the import will fail with the message ‘Key already known’. You will have to delete the existing key first: the private key with `gpg --delete-secret-keys`, then the public key with `gpg --delete-keys`.

Conclusion

Aside from commits, you can also sign tags. If you are not familiar with public key cryptography, check this video on YouTube; it is one of the simplest explanations that I have heard.

Some of the useful commands in our case:

`gpg --list-keys` and `gpg --list-secret-keys` list your public and private keys respectively, along with their trust state.

`git config --list --show-origin` shows all of your Git settings, so that you can check whether the necessary ones are already set.

To configure your Git client to sign commits by default for a local repository, in Git versions 2.0.0 and above, run `git config commit.gpgsign true`. To sign all commits by default in any local repository on your computer, run `git config --global commit.gpgsign true`.

To store your GPG key passphrase so you don’t have to enter it every time you sign a commit, I recommend using Gpg4win.

That’s all folks, don’t forget to sign your work!