Recently I came across several interesting techniques for solving some not-so-everyday challenges with TFS. Besides sharing them with you, I would also like to leave them here as a note to my future self. I will list several short tips in no particular order.

Let’s start.

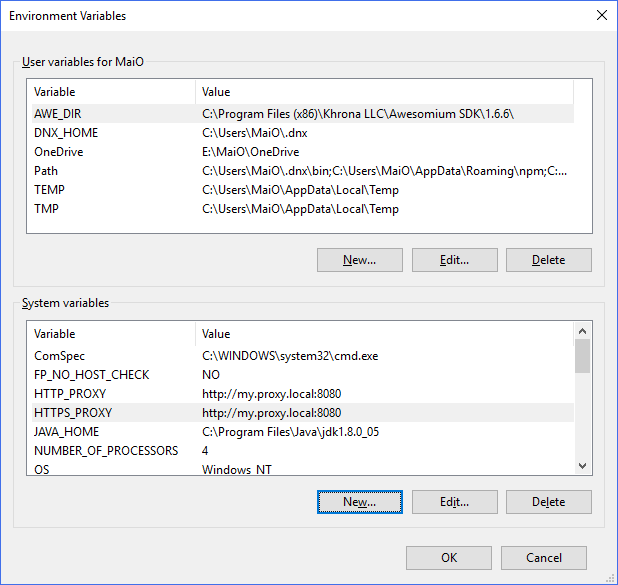

Proxy squared

In one of my past articles, TFS 2015 behind a proxy, I wrote about giving TFS internet access via a proxy server. Having a TFS server behind a proxy is not very common, but in many enterprises it may be the case. That post shows how to let the TFS web application access the web through a proxy on TFS 2015, and it is also valid for TFS 2017. However, I failed to mention that another component on the application tier needs the same setting: the TFS Job Agent. You may ask yourself why the TFS Job Agent would need to access the internet. Well, if you set up a web hook in your service hooks and it needs to reach a machine outside your network, the TFS Job Agent must be able to do so, as it is the component that actually sends the request generated by the chosen event. Luckily, things are quite simple. Go to the C:\Program Files\Microsoft Team Foundation Server 15.0\Application Tier\TFSJobAgent folder and open the TfsJobAgent.exe.config file. Add the following section inside the configuration element, pointing it to your proxy server:

<configuration>

...

<system.net>

<defaultProxy>

<proxy usesystemdefault="True" proxyaddress="http://your.proxy.server.com:8080" bypassonlocal="True" />

</defaultProxy>

</system.net>

</configuration>

Once you have saved these changes, you need to restart the TFS Job Agent. That is easily done by executing the following from a command prompt with full administrator permissions:

net stop tfsjobagent

followed by

net start tfsjobagent

Now your web hook requests to an external party should succeed.
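If you have several application tier machines, the manual edit described above could also be scripted. What follows is only a minimal sketch in Python, not an official tool: the function name add_default_proxy is hypothetical, and it works on the configuration text rather than on the file itself. Note that the standard library parser drops XML comments and the XML declaration, so keep a backup of the original file before writing anything back.

```python
import xml.etree.ElementTree as ET


def _child_or_create(parent, tag):
    """Return the first direct child with the given tag, creating it if missing."""
    for child in parent:
        if child.tag == tag:
            return child
    return ET.SubElement(parent, tag)


def add_default_proxy(config_xml, proxy_address):
    """Return config_xml with a system.net/defaultProxy/proxy element set.

    Hypothetical helper: it mirrors the section shown in the article and is
    safe to run twice (existing elements are reused, not duplicated).
    """
    root = ET.fromstring(config_xml)
    if root.tag != "configuration":
        raise ValueError("expected a <configuration> root element")

    system_net = _child_or_create(root, "system.net")
    default_proxy = _child_or_create(system_net, "defaultProxy")
    proxy = _child_or_create(default_proxy, "proxy")

    # Same attribute values as in the hand-written section.
    proxy.set("usesystemdefault", "True")
    proxy.set("proxyaddress", proxy_address)
    proxy.set("bypassonlocal", "True")

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = "<configuration><runtime /></configuration>"
    print(add_default_proxy(sample, "http://your.proxy.server.com:8080"))
```

The TFS Job Agent still needs to be restarted afterwards, exactly as with the manual edit.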

Browsing TFS from the AppTier machine fails with an ‘Unauthorized: Logon Failed’ error

If you are not using the machine name to access your TFS server (for example, you use a CNAME in DNS, or an A record that points to, let’s say, the NLB virtual IP), you may discover strange behavior on your application tier server when it tries to access the service locally. The issue is not strictly related to TFS; it is caused by the loopback check security feature, which is designed to help prevent reflection attacks on your computer. You can read more about it in KB896861. On TFS 2015 or earlier this can also cause problems when you try to set up the Notification URL. The solution is quite simple: adding a value to the registry solves it. The following PowerShell command will do the trick:

New-ItemProperty HKLM:\System\CurrentControlSet\Control\Lsa\MSV1_0 -Name "BackConnectionHostNames" -Value "your.tfs.com","tfs.yourcompany.com" -PropertyType multistring

Set the value to the host names you have chosen for your DNS entries (host names only, without the scheme or port). The server may require a restart for the change to take effect.
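Since the registry value expects bare host names, it can help to derive them from the URLs your users actually type. The following is just an illustrative Python sketch; the function names and the sample URLs are assumptions and not part of any TFS tooling.

```python
from urllib.parse import urlsplit


def back_connection_host_names(urls):
    """Extract unique host names (lower-cased, original order) from TFS URLs."""
    hosts = []
    for url in urls:
        host = urlsplit(url).hostname
        if host is None:
            # urlsplit only recognises the host when a scheme such as http:// is present.
            raise ValueError(f"no host name found in {url!r}")
        if host not in hosts:
            hosts.append(host)
    return hosts


def as_powershell_value(hosts):
    """Format host names the way they appear after -Value in the command above."""
    return ",".join(f'"{host}"' for host in hosts)


if __name__ == "__main__":
    names = back_connection_host_names(
        ["http://your.tfs.com:8080/tfs", "https://tfs.yourcompany.com/tfs"]
    )
    print(as_powershell_value(names))
```

The printed string can be pasted after -Value in the New-ItemProperty command.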

TFS DBs under a SQL AlwaysOn replica

Obviously, this is not a guide on how to set up an AlwaysOn replica on SQL Server and move your databases under replication. That is a topic for a much longer post. What I would like to show you here is what is necessary purely on the TFS side to get your application tier to connect to the cluster via the SQL Availability Group Listener.

The following commands will make that happen:

TFSConfig RegisterDB /SQLInstance:TFS_LISTENER,10010 /databaseName:Tfs_Configuration

and

TFSConfig RemapDBs /DatabaseName:TFS_LISTENER,10010;Tfs_Configuration /SQLInstances:TFS_LISTENER,10010 /AnalysisInstance:TFSAS /AnalysisDatabaseName:Tfs_Analysis

The TFSConfig command must be run from an elevated Command Prompt, even if the running user has administrative credentials. To open an elevated Command Prompt, click Start, right-click Command Prompt, and then click Run as Administrator.

The TFSConfig tool is installed in the Tools directory. By default, this will be:

TFS 2017: %programfiles%\Microsoft Team Foundation Server 15.0\Tools

TFS 2015: %programfiles%\Microsoft Team Foundation Server 14.0\Tools

TFS 2013: %programfiles%\Microsoft Team Foundation Server 12.0\Tools

TFS 2012: %programfiles%\Microsoft Team Foundation Server 11.0\Tools

TFS 2010: %programfiles%\Microsoft Team Foundation Server 2010\Tools

With the first command we update the name of the server that hosts the TFS configuration database. The SQLInstance parameter points to the Availability Group Listener and not to an actual server instance. 10010 is just the port on which the listener replies (a non-standard port in my case). If you are on a version of TFS prior to 2017, you will need to include the /usesqlalwayson parameter, which is no longer necessary on TFS 2017.

With the second command we redirect the team project collection databases so that they are accessed via the SQL Availability Group Listener. Again, if you are not running TFS 2017, you will need to specify the /usesqlalwayson parameter at the end.

Make sure that you also specify your analysis server correctly, as it is not accessible through the SQL Availability Group Listener.

Once done, run some failover tests and verify that your TFS instance functions correctly.

More information about the TFSConfig tool can be found on the Manage TFS server configuration with TFSConfig page.
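To keep the version-specific detail straight, the two command lines could be generated instead of typed by hand. This is a small Python sketch under the assumptions stated in this section: the function name build_tfsconfig_commands is hypothetical, the defaults simply repeat the names used in my example, and /usesqlalwayson is appended only for versions prior to TFS 2017. It only builds the strings; you still run them yourself from an elevated Command Prompt.

```python
def build_tfsconfig_commands(listener, port, tfs_year,
                             config_db="Tfs_Configuration",
                             analysis_instance="TFSAS",
                             analysis_db="Tfs_Analysis"):
    """Return the RegisterDB and RemapDBs command lines for a listener.

    tfs_year is the product year (2015, 2017, ...); versions before 2017
    get the /usesqlalwayson parameter appended at the end.
    """
    instance = f"{listener},{port}"
    register = (
        f"TFSConfig RegisterDB /SQLInstance:{instance} "
        f"/databaseName:{config_db}"
    )
    remap = (
        f"TFSConfig RemapDBs /DatabaseName:{instance};{config_db} "
        f"/SQLInstances:{instance} "
        f"/AnalysisInstance:{analysis_instance} "
        f"/AnalysisDatabaseName:{analysis_db}"
    )
    if tfs_year < 2017:
        register += " /usesqlalwayson"
        remap += " /usesqlalwayson"
    return [register, remap]


if __name__ == "__main__":
    for command in build_tfsconfig_commands("TFS_LISTENER", 10010, 2015):
        print(command)
```

Reviewing the generated lines before running them is a cheap way to catch a wrong port or database name.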

Restore permissions for project administrators on Service Hooks

If you upgraded your TFS instance from TFS 2013 or an earlier version to TFS 2015/2017, it may happen that Project Administrators, a role that should have the right to create and edit Service Hooks, do not have those permissions in place. You can add the permissions manually, or you can use a tool provided by Microsoft to check the current situation and correct it if necessary. I made some changes to this tool so that you can do this for all of the projects and collections on your instance. A fork of the tool can be found on GitHub at https://github.com/mmajcica/vsts-integration-samples.

Once you have downloaded and compiled the code, just run the command line tool with the /Server parameter and specify the full path to your service, like http://mytfs:8080/tfs. This is sufficient for it to check and, if necessary, correct the missing rights. The same can be done for a specific collection only, by using the /collection parameter and passing in the path to the desired collection, like http://mytfs:8080/tfs/DefaultCollection.

Controlling and debugging TFS Jobs from DB

Often, when you check your jobs and realize that something went wrong, you need to analyze the issue in detail and retry the failing jobs. This is usually done via web services; however, it can also be done directly by querying the DB. I often find the second approach quicker and easier. Let me show you a couple of tricks and where the necessary data is located.

First things first. Before we can do anything else, we need to find the Id of the job we want to work with. Let’s assume that I’m looking for the ‘Reporting Service Path Rename’ job, which in my case is failing.

Jobs can be defined in a collection database as well as in the Tfs_Configuration database. This specific one is defined at the collection level, so I will execute the following query on the collection DB.

SELECT JobId FROM tbl_JobDefinition WHERE JobName like '%report%'

At this point you should get back the JobId which we will use later for obtaining the execution history and to put a new job in the queue.

In my case the above query returned the following GUID: 6322B69A-04BD-47DF-9390-C3185ED59287

On the Tfs_Configuration database, you can now check the state of the above job with the following query:

SELECT * FROM tbl_JobHistory WHERE JobId = '6322B69A-04BD-47DF-9390-C3185ED59287' AND NOT Result = 0 ORDER BY StartTime DESC

This returns all of the failed runs for the given job, most recent first. You can get valuable information from the result of this query. In particular, I need the JobSource value, which indicates the collection for which this job is failing.

To get the collection ID <=> collection name mappings, you can check the following table:

SELECT * FROM tbl_ServiceHost ORDER BY Name

Let’s get to the point and trigger the failing job from my example again. This is the query that will create a new run for the given job:

DECLARE @jobSource UNIQUEIDENTIFIER

DECLARE @jobs typ_GuidInt32Table

SELECT @jobSource = 'C64929FF-9329-4123-BF82-F021DDCBE0C3'

INSERT INTO @jobs VALUES('6322B69A-04BD-47DF-9390-C3185ED59287', '1')

EXEC prc_QueueJobs @jobSource, @jobs, 15, 0

As you can see, we used the job id that we retrieved earlier and the id of the collection for which the job is going to be triggered (as it is a collection-specific job). The last thing left to do is to verify the state of the run, which we can do by checking tbl_JobQueue for all of the running jobs:

SELECT * FROM tbl_JobQueue WHERE JobState = '1'

Now that you know the tables and stored procedures in play, you can try it and proceed on your own.

Be very careful when modifying the TFS DB! It is definitely not a recommended practice! 🙂

Conclusion

These are only some of the many issues I have solved in the past for which I couldn’t find a solution by simply asking Google. I hope this information will save you hours of searching for a valid solution to your TFS challenges.