Passing values between TFS 2015 build steps

Introduction

Sometimes you need to make a value produced by one build step in your build definition available to all the build steps that run after it. This is not well documented, but there are two ways of passing values between build steps.

Both of the approaches I am going to illustrate here are based on setting a variable on the task context. The first task sets a variable, and the tasks that follow can use it. The variable is exposed to the following tasks as an environment variable. When the ‘issecret’ option is set to true, the value of the variable is saved as a secret and masked out of the log.

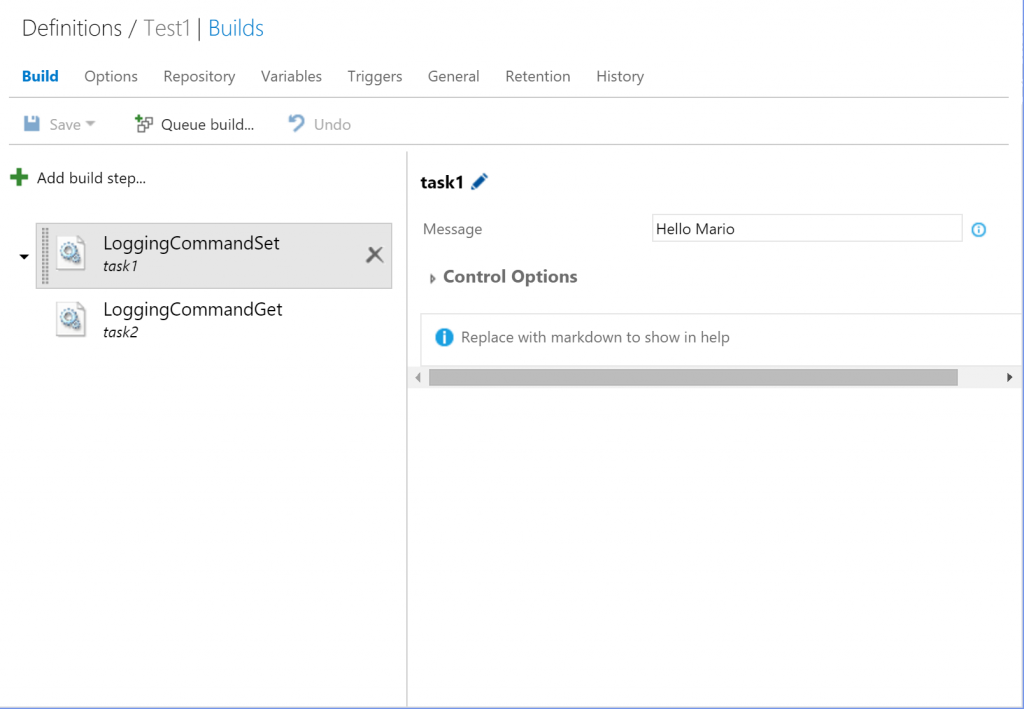
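
As a rough sketch of what such a logging command looks like, the snippet below builds the task.setvariable line with and without the issecret property. Format-SetVariableCommand is a hypothetical helper introduced only for illustration (it is not part of the build agent), and the variable names and values are made up.

```powershell
# Hypothetical helper, not provided by the agent: builds a task.setvariable logging command.
function Format-SetVariableCommand {
    param(
        [Parameter(Mandatory = $true)][string]$Name,
        [string]$Value = '',
        [switch]$IsSecret
    )

    # Properties are written as key=value pairs, each terminated by a semicolon.
    $properties = "variable=$Name;"
    if ($IsSecret) {
        $properties += 'issecret=true;'
    }

    return "##vso[task.setvariable $properties]$Value"
}

# A plain variable and a secret one (example names and values only).
Write-Output (Format-SetVariableCommand -Name 'task1Msg' -Value 'Hello')
Write-Output (Format-SetVariableCommand -Name 'task1Pwd' -Value 's3cret' -IsSecret)
```

Outside a build this simply prints the two lines. On an agent, writing them to standard output is what sets the variables.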

To test both approaches, I made four build tasks: two for the first approach and two for the second. They look something like this:

You can download all of the examples here.

Task Logging Command

The build task context supports several types of logging commands. Logging commands are a facility available in the run context of the build engine that lets us perform particular actions. A full list of available logging commands is available here. Be aware that some of them are only available from a certain version of the build agent onwards, so check the minimum agent version before using them and, if necessary, restrict your build task to that version.

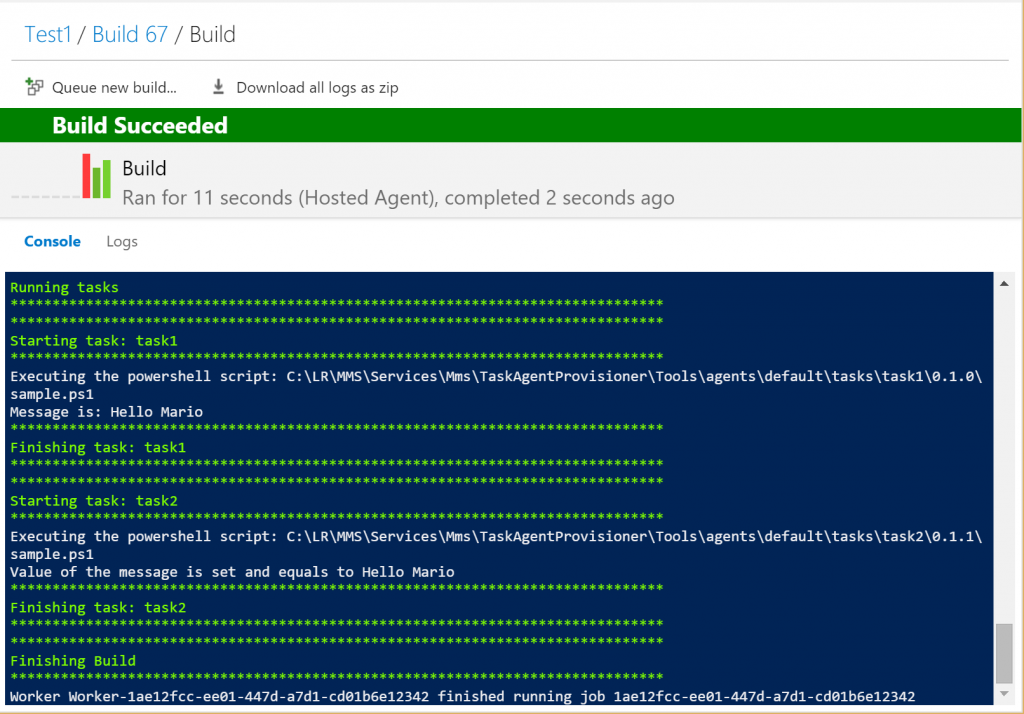

In this example, I will use a variable provided as a parameter to my task, with its value set from the UI, print the value out and store it in the task context. This will be my build task, called task1. Once the task manifest is set up correctly, I will upload it to my on-premises TFS (it will also work on VSTS/VSO). Let’s check the code.

    # The task input arrives as a script parameter with the same name.
    param([string]$msg)
    Write-Output "Message is: $msg"
    Write-Output ("##vso[task.setvariable variable=task1Msg;]$msg")

Now I will create another task and call it task2. This task will try to retrieve the value from the task context and then print it out. The following is the code I’m using.

    # Variables set by earlier tasks are exposed as environment variables.
    $task1Msg = $env:task1Msg
    if ($task1Msg)
    {
        Write-Output "Value of the message is set and equals to $task1Msg"
    }
    else
    {
        Write-Output "Value of the message is not set."
    }
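
One detail worth keeping in mind: as far as I know, the agent upper-cases variable names and replaces dots with underscores when it exposes them as environment variables (Build.SourcesDirectory becomes BUILD_SOURCESDIRECTORY). On Windows the lookup is case-insensitive, which is why $env:task1Msg works here. Below is a minimal sketch of a lookup that applies this convention explicitly. Get-VariableFromEnvironment is a hypothetical helper name, and the normalisation rule is an assumption you should verify against your agent version.

```powershell
# Hypothetical helper: reads a build variable through its environment variable name.
function Get-VariableFromEnvironment {
    param([Parameter(Mandatory = $true)][string]$Name)

    # Assumption: the agent replaces '.' with '_' and upper-cases the name.
    $envName = $Name.Replace('.', '_').ToUpperInvariant()

    # Returns $null when the variable has not been set.
    return [Environment]::GetEnvironmentVariable($envName)
}

# Simulate what an earlier task would have left behind, then read it back.
$env:TASK1MSG = 'Hello from task1'
Write-Output (Get-VariableFromEnvironment -Name 'task1Msg')
```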

You can find all of the examples I’m using available for download here.

If you now add task1 and task2 to your build definition, you will see that whatever you set as the Message in task1 is printed to the console by task2.

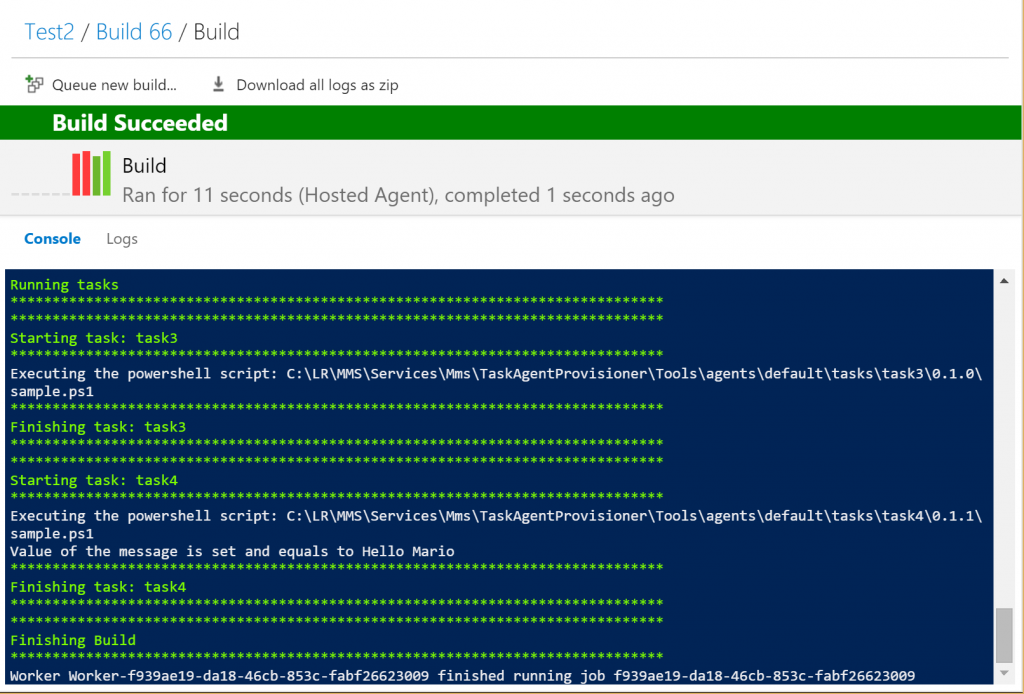

Set-TaskVariable and Get-TaskVariable cmdlets

The same result can be achieved by using cmdlets that are made available by the build execution context. We are going to create a task called task3, which has the same functionality as task1 but uses a different approach to get there. Let’s check the code:

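    # Load the module that provides Set-TaskVariable.
    Import-Module "Microsoft.TeamFoundation.DistributedTask.Task.Common"
    # Store the value of $msg under the name task1Msg.
    Set-TaskVariable "task1Msg" $msg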

As you can see, we are importing a module from a specific library called Microsoft.TeamFoundation.DistributedTask.Task.Common. You can read more about the available modules and exposed cmdlets in my post called Available modules for TFS 2015 build tasks.

After that we are able to invoke the Set-TaskVariable cmdlet and pass the variable name and value as parameters. This will do the job.
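
Since both approaches end up setting the same variable, they can be combined. The sketch below uses Set-TaskVariable when the cmdlet is available and otherwise falls back to the logging command. Set-BuildVariable is a hypothetical wrapper name of mine, and it assumes that the Task.Common module has already been imported wherever it exists.

```powershell
# Hypothetical wrapper: prefers the cmdlet, falls back to the logging command.
function Set-BuildVariable {
    param(
        [Parameter(Mandatory = $true)][string]$Name,
        [string]$Value = ''
    )

    # Get-Command returns nothing (and no error) when the cmdlet is not loaded.
    if (Get-Command -Name 'Set-TaskVariable' -ErrorAction SilentlyContinue) {
        Set-TaskVariable $Name $Value
    }
    else {
        # The agent picks this line up from the task's standard output.
        Write-Output "##vso[task.setvariable variable=$Name;]$Value"
    }
}

Set-BuildVariable -Name 'task1Msg' -Value 'Hello from task3'
```

When you run it outside a build, the cmdlet is not present, so the logging command line is printed instead.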

In order to retrieve the variable, we need to import another module, Microsoft.TeamFoundation.DistributedTask.Task.Internal. Although setting and getting a variable are closely related actions, for some reason Microsoft decided to package them in two different libraries. We are going to retrieve our variable value in the following way:

    # Load the module that provides Get-TaskVariable.
    Import-Module "Microsoft.TeamFoundation.DistributedTask.Task.Internal"
    # $distributedTaskContext is supplied by the build execution context.
    $task1Msg = Get-TaskVariable $distributedTaskContext "task1Msg"
    if ($task1Msg)
    {
        Write-Output "Value of the message is set and equals to $task1Msg"
    }
    else
    {
        Write-Output "Value of the message is not set."
    }

As you can see, we are invoking the Get-TaskVariable cmdlet with two parameters. The first one is the task context. Among other things, the build execution context is populated with a variable called distributedTaskContext, which is of type Microsoft.TeamFoundation.DistributedTask.Agent.Worker.Common.TaskContext and exposes information such as ScopeId, RootFolder and ScopeType. The second parameter is the name of the variable we would like to retrieve.

The result still stays the same as you can see from the following screenshot:

Conclusion

Both approaches will get the job done. It is up to you to choose the preferred way of storing and retrieving values. A mix of both also works. You need to experiment a bit with the build task context and see what works best for you. Be aware that this is valid at the moment I’m writing this, and that it works with TFS 2015, TFS 2015.1 and VSTS as of 2016-02-19. It seems, however, that Microsoft will heavily refactor all of this in the near future, at which point this guide will no longer be relevant.

Stay tuned!